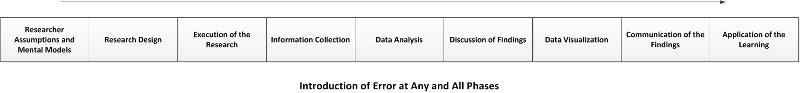

Collecting Relevant Information

Proper Research Approaches, Proper Metrics

The table below lists some of the common research methods in higher education. It is by no means exhaustive; it is included to spark some thinking about the more formalized methods of research.

| Quantitative (Research) Methods | Qualitative (Research) Methods | Mixed (Research) Methods |
| --- | --- | --- |
| empirical experiments; structured records reviews; surveys / questionnaires; historical research and analysis | fieldwork (in a natural setting); Delphi study; grounded theory; heuristic research; interviewing; surveys / questionnaires; case studies; portraiture; historical research and analysis | a combination; meta-analyses (qual, quant and mixed methods) |
| (based on a range of statistical analysis methods) | (emergent vs. pre-determined research) | (a mix of information gathering and statistical analysis) |

Addressing Threats to Research and Information Validity

Applied Information Basics

Shape: Almost all information has some "shape" or pattern. Very little is formless. Very little is truly and entirely random (except when it is, according to author Nassim Nicholas Taleb).

Absence / Presence: What is seen may be relevant. What is not seen in the research may be relevant.

Surveillance: Understanding a situation depends on surveillance, meaning observations and measurements taken at regular intervals (or continuously). From these, an observer establishes a baseline, against which anomalies may then be noticed.
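A minimal sketch of the baseline-then-anomaly idea, assuming the baseline is roughly stable and using the common "more than three standard deviations from the mean" rule (a convention, not something the text prescribes). The function name `flag_anomalies` and all data values are hypothetical.

```python
from statistics import mean, stdev

def flag_anomalies(baseline, new_readings, threshold=3.0):
    """Return (index, value) pairs from new_readings that lie more than
    `threshold` standard deviations away from the baseline mean."""
    mu = mean(baseline)
    sigma = stdev(baseline)  # sample standard deviation; needs >= 2 points
    return [(i, x) for i, x in enumerate(new_readings)
            if abs(x - mu) > threshold * sigma]

# Hypothetical daily counts recorded at regular intervals (the baseline).
baseline = [52, 48, 50, 51, 49, 53, 47, 50, 52, 48]
new_readings = [51, 49, 75, 50]

print(flag_anomalies(baseline, new_readings))  # [(2, 75)]
```

The baseline here has a mean of 50 and a standard deviation of 2, so only a reading more than 6 away from 50 is flagged. A fixed threshold like this quietly assumes mild, bell-curve-like variation.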

Context for Meaning: Information is about context. Outside of context, information may lack relevance.

Framing and Interpretation: Information is about focus. It is about point-of-view. It is about assumed values. Information is about (subjective) interpretation. (This is why it's helpful to game out various points of view and possible interpretations of the same set of information.) People make inferences about raw data and, in so doing, process it into usable information.


Predictive Analytics and Hidden Information: Predictive analytics is about trendlines. It is about human habituation and predictability of future actions based on data points. It is about hidden information or an extension of what is non-intuitively knowable. (See Charles Duhigg's "The Power of Habit," 2012.)
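To make "trendlines" concrete, here is a minimal sketch of an ordinary least-squares trendline extrapolated one step past the data. The weekly counts are hypothetical, and the forecast is only as good as the assumption that the habit behind the data continues unchanged. (Python 3.10+ also offers `statistics.linear_regression` for the same fit.)

```python
def fit_trendline(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return slope, intercept

# Hypothetical weekly counts of some habitual action (e.g., logins).
weeks = [1, 2, 3, 4, 5, 6]
counts = [10, 12, 15, 16, 19, 20]

slope, intercept = fit_trendline(weeks, counts)
forecast_week_8 = slope * 8 + intercept  # extrapolation: assumes the trend holds
print(round(slope, 3), round(intercept, 3), round(forecast_week_8, 2))
```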

Modeling: All simulations are based on underlying information. Without knowing the assumptions of the model, one cannot truly assess whether the model has any value. Beyond that, a model has to track with the factual world, so there have to be points where the model's predictions may be tested against the world.
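A minimal sketch of testing a model's assumption against the world: a toy model whose only assumption is a constant growth rate is scored against observed values with mean absolute error. The model, the candidate rates, and the "observed" data are all hypothetical.

```python
def predict(initial, growth_rate, periods):
    """A toy model whose key assumption is a constant per-period growth rate."""
    return [initial * (1 + growth_rate) ** t for t in range(periods)]

def mean_absolute_error(predicted, observed):
    """Average size of the gap between predictions and observations."""
    return sum(abs(p - o) for p, o in zip(predicted, observed)) / len(observed)

observed = [100, 104, 109, 112, 118]  # hypothetical real-world measurements

for rate in (0.02, 0.04, 0.08):
    mae = mean_absolute_error(predict(100, rate, len(observed)), observed)
    print(f"assumed growth {rate:.0%}: MAE = {mae:.2f}")
```

Making the assumption an explicit parameter is the point: a reader can see exactly what the model presumes and how far each version drifts from the observations.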

Omniscience: Competition in information is about knowing something critical without others knowing. It is about knowing earlier. It is about situational awareness.

Following the Rules

- Follow the standards of the domain field.

- Adhere to ethical guidelines about research, particularly any research that involves humans or animals...or any sort of risk.

- Ensure that all participants in your research have been duly notified (through informed consent).

- Maintain confidentiality of all data.

- Store the data for the required time period.

Creating and Deploying Effective Surveys

Ensure that all survey instruments are phrased so as to avoid bias. (Question Design)

Phrase questions to focus on one issue at a time. Avoid double-barreled questions.

Ensure that surveys reach a sizeable random sample of a population, not an accidental defined sub-set. (Survey Methodology)
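A minimal sketch contrasting a simple random sample with an "accidental" subset, using the standard library's `random.Random.sample`. The sampling frame, its sub-group, and the sample size are hypothetical.

```python
import random

# Hypothetical sampling frame: 10,000 member IDs, where the first 2,000
# happen to belong to one sub-group (e.g., people who registered online).
population = list(range(10_000))
SUBGROUP_SIZE = 2_000

rng = random.Random(42)  # seeded so the draw is reproducible
random_sample = rng.sample(population, k=500)  # simple random sample, no repeats
convenience_sample = population[:500]          # "accidental" subset: first 500 listed

def share_in_subgroup(sample, subgroup_size=SUBGROUP_SIZE):
    """Fraction of a sample drawn from the hypothetical sub-group."""
    return sum(1 for member in sample if member < subgroup_size) / len(sample)

print(share_in_subgroup(random_sample))       # close to the true 0.20
print(share_in_subgroup(convenience_sample))  # 1.0: only one sub-group reached
```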

Ensure that all survey questions have been tested for multicollinearity (to eliminate survey items that measure similar things). It is important to avoid conflating very similar factors. The variables being tested in a given context should be as distinct from, and independent of, each other as possible. Where they overlap, there will be unnecessary "noise" in the data.

Analysis and Decision-making

Not Over-Reaching in Terms of Generalizability

- Small sample size (as in case studies with n = 1 or few)

- Insufficient sample size in terms of percentage of the target population

Do not over-generalize. Draw conclusions only in proper measure.

Do not confuse correlation with causation. (Just because two events occur close together in time does not necessarily mean that there is a causal relationship between them.)

Make sure there is sufficient information before making an important decision. (This is all about due diligence.)

Sometimes researchers observe and assess one thing but confuse it with another. The risk of conflation is greatest when the two things being compared are closely related. Tests for multicollinearity are an attempt to separate the measures for one factor from those for another, potentially closely related, factor.

Coding Data Methodically

Coding data appropriately

Maximizing the Data Collection to Saturation

Building a strong repository of complete and relevant information for the "triangulation" of data

Breaking "The Law of Small Numbers"

If a sample is too small, its findings will be much more exaggerated (with more extremes and outlier effects) than those of larger samples. Too often, analysts read causal effects into observations when the "null hypothesis" cannot actually be rejected (Kahneman, 2011, p. 118).
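A minimal simulation of the point above: flip a fair coin (true rate exactly 50%) in samples of different sizes and count how often a sample looks "extreme" (80% or more heads, or 20% or fewer). The sample sizes, the 80/20 cutoff, the trial count, and the seed are hypothetical choices.

```python
import random

def share_of_extreme_samples(sample_size, trials=10_000, seed=1):
    """Fraction of fair-coin samples whose share of heads is >= 0.8 or <= 0.2,
    even though the true rate is exactly 0.5."""
    rng = random.Random(seed)
    extreme = 0
    for _ in range(trials):
        heads = sum(rng.random() < 0.5 for _ in range(sample_size))
        p = heads / sample_size
        if p >= 0.8 or p <= 0.2:
            extreme += 1
    return extreme / trials

for n in (5, 20, 100):
    print(n, share_of_extreme_samples(n))
```

With five flips, about 37.5% of samples look extreme purely by chance (12 of the 32 equally likely outcomes); with 100 flips, essentially none do. Nothing about the coin changed, only the sample size.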

Applying Logic

Avoid endogeneity. Do not infer a cause-and-effect relationship from an outside factor when the effect could be coming from a factor within the system itself. There may also be factors affecting a result that are not even considered in the model.

Consider all possible interpretations of the data.

Applying the Base Rate

Statistics can help an analyst understand the probabilities in a particular circumstance. A "base rate" is the general statistical probability of a particular occurrence. A "base rate fallacy" occurs when people judge the likelihood of an outcome from specific, often irrelevant, information without considering the underlying probabilities (Kahneman, 2011).
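A worked example of why the base rate matters, using Bayes' theorem with hypothetical numbers: a condition with a 1% base rate, a signal that catches 90% of true cases, and a 9% false-alarm rate. Judging only from the signal's accuracy suggests a positive result is very likely correct; including the base rate shows that most positives are false alarms.

```python
def posterior(base_rate, hit_rate, false_alarm_rate):
    """P(condition | positive signal) via Bayes' theorem."""
    true_pos = base_rate * hit_rate                  # 0.01 * 0.90 = 0.009
    false_pos = (1 - base_rate) * false_alarm_rate   # 0.99 * 0.09 = 0.0891
    return true_pos / (true_pos + false_pos)

p = posterior(base_rate=0.01, hit_rate=0.90, false_alarm_rate=0.09)
print(round(p, 3))  # 0.092: fewer than 1 in 10 positives reflect the condition
```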

Avoiding the WYSIATI (What You See is All There Is) Fallacy

Kahneman (2011) describes the fallacy of "What You See is All There Is" (WYSIATI). People often fail to consider the "unknown unknowns" or unseen evidence. An analyst should consider evidence across a wide spectrum so as not to close off an exploration too soon. Fixing too early on a possible explanation may be misleading.

Triangulating Data

Compare various data streams to get a fuller view of an issue. The more you will be generalizing and / or the higher the risk of the judgment, the more due diligence has to be done.

Information Sampling over Time

Sometimes researchers sample only a single slice of time and yet extrapolate results as if they held continuously over time. Sampling over longer periods may improve generalizability.
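A minimal sketch of how a single time slice can mislead, assuming a hypothetical usage measure that rises and falls on a weekly cycle. Averaging over 52 complete weeks recovers the long-run level; a two-day snapshot that happens to land near the peak overstates it.

```python
import math

def daily_usage(day):
    """Hypothetical usage with a weekly cycle around a long-run level of 100."""
    return 100 + 40 * math.sin(2 * math.pi * day / 7)

full_year = [daily_usage(d) for d in range(364)]   # 52 complete weeks
one_slice = [daily_usage(d) for d in range(1, 3)]  # a 2-day snapshot

print(round(sum(full_year) / len(full_year), 1))  # 100.0: the long-run level
print(round(sum(one_slice) / len(one_slice), 1))  # well above 100: a biased estimate
```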

Go with the Counter Narrative

Test out other points of view and data sets. Work hard to debunk your own stance or interpretation. Disbelieve. (Doing so can either strengthen your stance or weaken it.)

Be Leery of "Cognitive Ease"

Be aware of how easily certain explanations come to mind, because that ease of access often leads to an early explanation, and to a certitude, that may be incorrect. Kahneman (2011) discusses the risks of "cognitive ease" in analysis and decision-making. He also describes two cognitive systems in people. System 1 is automatic and quick and operates with "no sense of voluntary control" (p. 20). It is intuitive and tends towards gullibility. System 2 is used for complex computations and tends towards disbelief. It is much more logical and analytical, and it is linked with "the subjective experience of agency, choice, and concentration" (p. 21). Too often, people rely on System 1 when they should be applying System 2: System 1 is easier to access and requires less cognitive focus.
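Kahneman (2011) illustrates the two systems with a well-known puzzle: a bat and a ball cost $1.10 in total, and the bat costs $1.00 more than the ball. System 1 quickly offers 10 cents for the ball; deliberately checking the constraints (System 2) gives 5 cents. The sketch below works in cents to avoid floating-point rounding and simply checks both answers; the variable and function names are illustrative.

```python
TOTAL_CENTS = 110       # bat + ball
DIFFERENCE_CENTS = 100  # the bat costs this much more than the ball

# System 1: the answer that "comes to mind" by subtracting.
intuitive_ball = TOTAL_CENTS - DIFFERENCE_CENTS  # 10

# System 2: ball + (ball + DIFFERENCE) = TOTAL  =>  ball = (TOTAL - DIFFERENCE) / 2
ball = (TOTAL_CENTS - DIFFERENCE_CENTS) // 2     # 5
bat = ball + DIFFERENCE_CENTS                    # 105

def consistent(ball_cents):
    """Does this ball price satisfy both conditions of the puzzle?"""
    return ball_cents + (ball_cents + DIFFERENCE_CENTS) == TOTAL_CENTS

print(intuitive_ball, consistent(intuitive_ball))  # 10 False
print(ball, bat, consistent(ball))                 # 5 105 True
```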

The "Black Swan" Rebuttal

(This is an image of two black swans released through Creative Commons licensure.)

A Rebuttal Stance against Quantitative Data, Bell Curves, and Decision-making Based on Too-Little Information: Nassim Nicholas Taleb's "Black Swans"

Black Swans

"Black Swan" events are outlier events. They are rare but high-impact events that fall outside paradigms and outside bell curves (so-called "normal distribution" curves). Thinking too much within paradigms and bell curves makes black swans even more inconceivable, which leaves people unprepared for such outsized events.

The Explanatory Value of "Black Swans"

"A small number of Black Swans explain almost everything in our world, from the success of ideas and religions, to the dynamics of historical events, to elements of our own personal lives. Ever since we left the Pleistocene, some ten millennia ago, the effect of these Black Swans has been increasing. It started accelerating during the industrial revolution, as the world started getting more complicated, while ordinary events, the ones we study and discuss and try to predict from reading the newspapers, have become increasingly inconsequential" (Taleb, 2010, p. xxii). The world is about "non-linearities," non-routines, randomness, and true serendipity.

Other Problems with Current Uses of Data

- People tend to underestimate the error rate of their forecasts.

- People do not consider "anti-knowledge" or their missing information. People should acquire a huge anti-library of works that they've never read, so they can start getting busy learning. People need to be much more dissatisfied with their basis of knowledge because people know so little, as a rule.

- People tend to focus on small details and not abstract meta-rules.

- People tend towards non-thinking. Human predecessors "spent more than a hundred million years as nonthinking mammals" (Taleb, 2010, p. xxvi). People should instead strive towards "erudition" and satisfying "genuine intellectual curiosity."

- Social reward structures do not reward people for thinking preventively about major disasters; instead, they reward those who come in afterwards to make changes. (Taleb uses 9/11 as an example.)

- People tend to suffer the "triplet of opacity": (1) the illusion of understanding; (2) the retrospective distortion (history seems to make much more sense in retrospective analysis); (3) the "overvaluation of factual information and the handicap of authoritative and learned people, particularly when they create categories--when they 'Platonify.'" (Taleb, 2010, p. 8). Taleb suggests that Plato over-simplified the world by using general themes that undercut its true complexity. The "bell curve" is a similar oversimplification, in the sense that it is only one frame on a complex reality.

- Those with a shared framework of analysis will come out with generally the same (often erroneous) analyses. It's preferable to have a broad range of analysts using different conceptual and mental models...and to encourage diversities of opinion.

- Inductive logic is highly limiting. Taleb gives the example of a turkey that is fed faithfully for 1,000 days but is slaughtered on day 1,001. The Black Swan is a "sucker's problem" for people who are too willing to draw conclusions too early and on too little information.

- There is no long run (what is called "the asymptotic property" or the extension of the present into infinity). All reality is based on the short-term. The long-term is not predictable...by its very nature.

- People should not pursue verification of their theories. They should pursue the debunking of their ideas. They should cull evidence to show the opposite of what they think to truly test their theories. They should strive to find evidence that would prove themselves wrong (disconfirming evidence). They should cultivate a healthy skepticism.

- People tend to fall into a narrative fallacy--of over-interpreting by telling simple stories to bind unrelated facts together. People tend to reduce complexity through "dimension reduction," so they can feel in better control of complexity.

- Human memory is selective and dynamic. It remembers information in a malleable way and is not fully trustworthy.
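The turkey example can be made concrete with a simple inductive estimator. Laplace's rule of succession, (successes + 1) / (trials + 2), is not mentioned in the text; it is swapped in here as one hypothetical stand-in for "confidence built from repeated observation." Each uneventful day pushes the estimate higher, so confidence peaks on day 1,000, just before the event that the data could not reveal.

```python
from fractions import Fraction

def naive_confidence(days_fed):
    """Laplace's rule of succession: estimated chance of being fed tomorrow
    after `days_fed` consecutive days of being fed."""
    return Fraction(days_fed + 1, days_fed + 2)

for day in (1, 10, 100, 1000):
    print(day, float(naive_confidence(day)))
```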

Not Bell Curves: Taleb calls the use of "Gaussian bell curves" a kind of "reductionism of the deluded." The bell curve exists in a theoretical space, not in the real world; it does not exist outside the Gaussian family. A "random walk" considers possibilities, but it has severe limits: the limits of its analytical framework.

(Images: a fractal by Wolfgang Beyer, released through Creative Commons licensure, contrasted with a generic bell curve or "normal distribution")

The Limits of Mathematization

"Furthermore, assuming chance has anything to do with mathematics, what little mathematization we can do in the real world does not assume the mild randomness represented by the bell curve, but rather scalable wild randomness. What can be mathematized is usually not Gaussian, but Mandelbrotian." (Taleb, 2010, p. 128)
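A minimal sketch of what "scalable" means numerically. For a power-law (Pareto, or "Mandelbrotian") variable, the ratio P(X > 2x) / P(X > x) is the same at every level x; for a Gaussian variable it collapses as x grows, so large values become vanishingly rare. The Pareto parameters (alpha = 2, x_min = 1) and the standard normal are arbitrary, illustrative choices.

```python
import math

def pareto_survival(x, alpha=2.0, x_min=1.0):
    """P(X > x) for a Pareto (power-law) variable, valid for x >= x_min."""
    return (x_min / x) ** alpha

def normal_survival(x, mu=0.0, sigma=1.0):
    """P(X > x) for a normal (Gaussian) variable."""
    return 0.5 * math.erfc((x - mu) / (sigma * math.sqrt(2)))

for x in (1, 2, 4, 8):
    pareto_ratio = pareto_survival(2 * x) / pareto_survival(x)  # always 2**-alpha
    normal_ratio = normal_survival(2 * x) / normal_survival(x)  # shrinks rapidly
    print(x, round(pareto_ratio, 4), f"{normal_ratio:.2e}")
```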

Fractals and Mandelbrotian Randomness

"Fractal is a word Mandelbrot coined to describe the geometry of the rough and broken—from the Latin fractus, the origin of fractured. Fractality is the repetition of geometric patterns at different scales, revealing smaller and smaller versions of themselves. Small parts resemble, to some degree, the whole." (Taleb, 2010, p. 257)

Exponentially Large Scalable Effects and the Rationale for Fractals

The real world has non-linearities that are not accounted for in bell curves. In the real world, events may have scalable effects that magnify themselves. Taleb writes: "Like many biological variables, life expectancy is from Mediocristan, that is, it is subjected to mild randomness. It is not scalable, since the older we get, the less likely we are to live. In a developed country a newborn female is expected to die at around 79, according to insurance tables. When she reaches her 79th birthday, her life expectancy, assuming that she is in typical health, is another 10 years. At the age of 90, she should have another 4.7 years to go. At the age of 100, 2.5 years. At the age of 119, if she miraculously lives that long, she should have about nine months left. As she lives beyond the expected date of death, the number of additional years to go decreases. This illustrates the major property of random variables related to the bell curve. The conditional expectation of additional life drops as a person gets older.

"With human projects and ventures we have another story. These are often scalable...With scalable variables, the ones from Extremistan, you will witness the exact opposite effect. Let's say a project is expected to terminate in 79 days, the same expectation in days as the newborn female has in years. On the 79th day, if the project is not finished, it will be expected to take another 25 days to complete. But on the 90th day, if the project is still not completed, it should have about 58 days to go. On the 100th, it should have 89 days to go. On the 119th, it should have an extra 149 days. On day 600, if the project is not done, you will be expected to need an extra 1,590 days. As you see, the longer you wait, the longer you will be expected to wait." (Taleb, 2010, p. 159).
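The contrast Taleb draws is between conditional expectations of "additional time to go," E[X - t | X > t]. A minimal sketch, assuming a normal model for lifespan (mu = 79, sigma = 10) and a Pareto model for project duration (alpha = 1.5, with x_min at or below 79); these parameters are illustrative and do not reproduce Taleb's figures, only the direction of the effect. For the normal, the expected remainder shrinks as t grows; for the Pareto it equals t / (alpha - 1), so it grows with t.

```python
import math

def normal_remaining(t, mu, sigma):
    """E[X - t | X > t] for a normal variable (thin-tailed, 'Mediocristan')."""
    z = (t - mu) / sigma
    pdf = math.exp(-z * z / 2) / math.sqrt(2 * math.pi)  # standard normal density
    tail = 0.5 * math.erfc(z / math.sqrt(2))             # P(Z > z)
    return sigma * pdf / tail - (t - mu)

def pareto_remaining(t, alpha):
    """E[X - t | X > t] for a Pareto variable with alpha > 1 and t >= x_min
    (scalable, 'Extremistan'): equals t / (alpha - 1)."""
    return t / (alpha - 1)

for t in (79, 90, 100, 119):
    print(t, round(normal_remaining(t, mu=79, sigma=10), 1),
          round(pareto_remaining(t, alpha=1.5), 1))
```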

Fractals as the Framework

"Fractals should be the default, the approximation, the framework. They do not solve the Black Swan problem and do not turn all Black Swans into predictable events, but they significantly mitigate the Black Swan problem by making such large events conceivable." (Taleb, 2010, p. 262)

Accounting for "Fat Tails": Steiner (2012) suggests that it may be better to assume some unpredictability arising from human irrationality, which may result in "fat tails." The tails of a bell curve theoretically stretch into infinity, but they thin out very quickly; fat tails thin out far more slowly, so extreme outcomes occur much more often than a normal distribution would predict.
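A minimal comparison of thin and fat tails. The standard Cauchy distribution is swapped in here as a textbook example of a fat-tailed distribution (it is not taken from Steiner); both functions use closed-form two-sided tail probabilities, P(|X| > k).

```python
import math

def normal_two_sided_tail(k):
    """P(|Z| > k) for a standard normal variable (thin tails)."""
    return math.erfc(k / math.sqrt(2))

def cauchy_two_sided_tail(k):
    """P(|X| > k) for a standard Cauchy variable (fat tails)."""
    return 1 - 2 * math.atan(k) / math.pi

for k in (1, 3, 5, 10):
    print(k, f"{normal_two_sided_tail(k):.1e}", f"{cauchy_two_sided_tail(k):.3f}")
```

Near the center the two are comparable, but by k = 5 the normal model treats the event as roughly a one-in-two-million occurrence, while the Cauchy model still gives it better than a one-in-ten chance.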


References

Kahneman, D. (2011). Thinking, fast and slow. New York: Farrar, Straus and Giroux.

Steiner, C. (2012). Automate this: How algorithms came to rule our world. New York: Portfolio/Penguin.

Taleb, N. N. (2010/2007). The black swan: The impact of the highly improbable. New York: Random House.